Researchers must contend with an ever-growing volume of scientific literature and increasingly complex methodologies. To help them, a new generation of AI-based support tools has emerged: Agent Laboratory, AgentRxiv, AI Scientist-v2, and AI Co-Scientist are systems designed to assist researchers in their work.

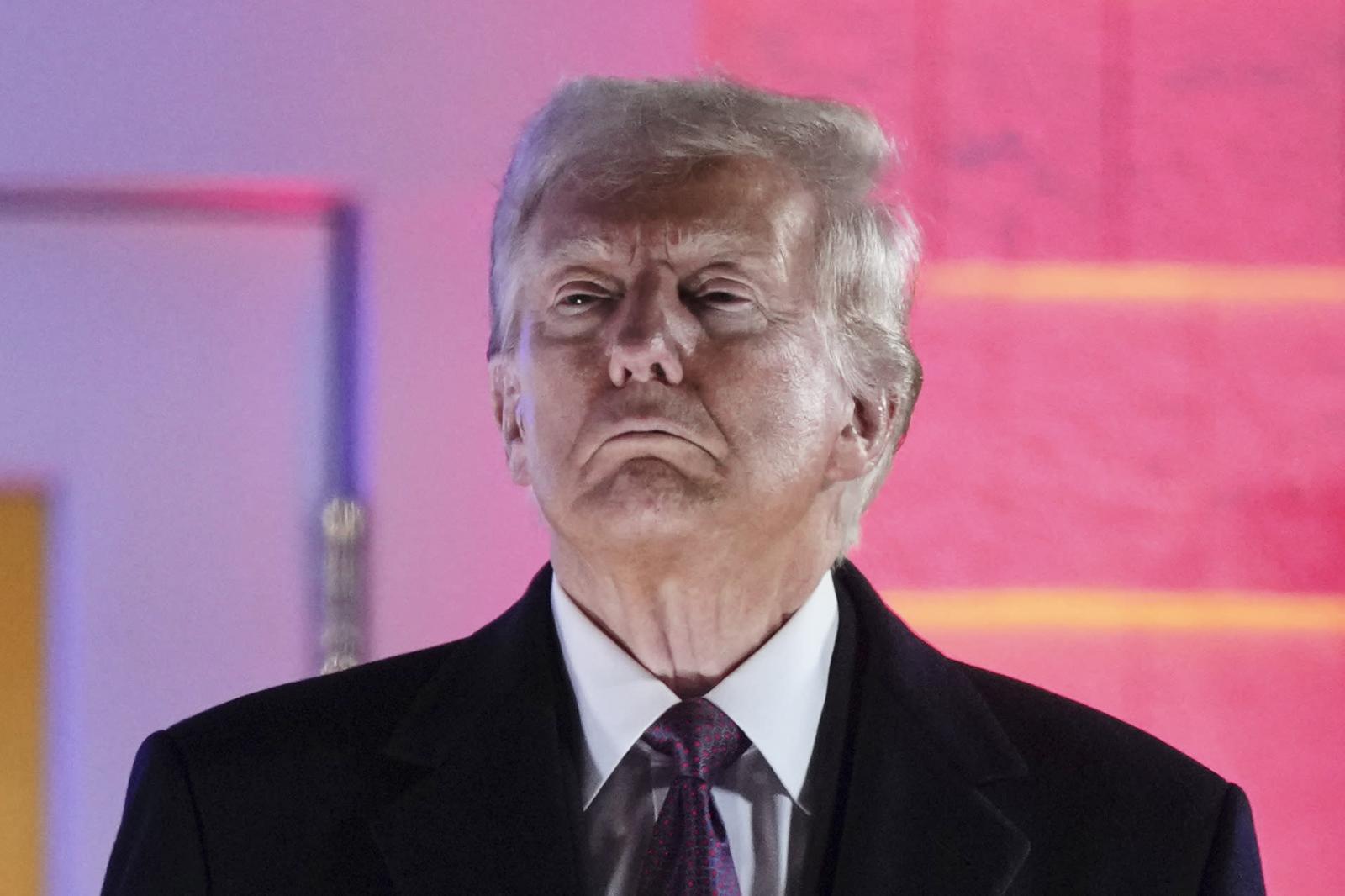

The landscape of contemporary scientific research presents several significant challenges. On the one hand, growing specialization requires ever deeper expertise in narrow fields; on the other, interdisciplinary studies demand the ability to navigate different domains of knowledge. This paradox puts pressure on researchers, who must balance depth and breadth in their investigations.

At the same time, the number of scientific publications continues to grow at a steady pace. It is estimated that millions of new studies are published annually in peer-reviewed journals. This vast amount of information makes it difficult for any individual to keep track of all relevant developments, even within their own specific field.

Figure 1. Number of articles published in scientific journals (source: OpenAlex.org)

Finally, the coding of experiments, data analysis, and report writing require technical skills that often divert researchers from conceptual work. A large portion of time is spent on repetitive tasks that could benefit from automation, such as literature review, programming standard experimental routines, or drafting and formatting documents.

In this context, artificial intelligence and large language models (LLMs) appear to be excellent candidates for the role of research assistant. "Can LLMs Generate Novel Research Ideas?" is the title of a study that examined the ability of LLMs to generate original research ideas. Conducted by a team from Stanford University, it involved over 100 researchers in natural language processing (NLP) to evaluate the quality of ideas generated by both humans and LLMs. Each idea was evaluated blind by expert researchers according to criteria such as novelty, feasibility, and effectiveness. The AI-generated ideas were judged more novel than those of human experts, although more difficult to implement.

AI-based Research Agents: Agent Laboratory

Agent Laboratory was developed by Samuel Schmidgall of Johns Hopkins University, who led a research team at AMD; AMD also provided the computational resources needed to run the models. Agent Laboratory functions as a collaborative ecosystem of specialized LLM-based agents that emulate different roles within a research laboratory. These include the PhD student, primarily responsible for literature review and result interpretation; the postdoctoral researcher (postdoc), who contributes to formulating experimental plans; the machine learning engineer (ML Engineer), responsible for data preparation and code implementation; and the professor, who collaborates in the writing phase to synthesize the research findings.
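To make the division of labor concrete, the following minimal sketch models the four roles as plain Python data and asks which roles take part in each of the three research stages (literature review, experimentation, and report writing). The phase labels, the `AgentRole` class, and the role-to-phase mapping are illustrative assumptions derived from the role descriptions above, not Agent Laboratory's actual code or configuration.

```python
from dataclasses import dataclass

# Hypothetical labels for the three research stages.
LITERATURE_REVIEW = "literature_review"
EXPERIMENTATION = "experimentation"
REPORT_WRITING = "report_writing"


@dataclass(frozen=True)
class AgentRole:
    """A simulated lab member: its title, its duties, and the phases it joins."""
    name: str
    duties: tuple[str, ...]
    phases: frozenset[str]


# Illustrative mapping based on the role descriptions in the text;
# the real system may assign roles to phases differently.
ROLES = (
    AgentRole("PhD student", ("literature review", "result interpretation"),
              frozenset({LITERATURE_REVIEW, EXPERIMENTATION})),
    AgentRole("Postdoc", ("experimental planning",),
              frozenset({EXPERIMENTATION})),
    AgentRole("ML Engineer", ("data preparation", "code implementation"),
              frozenset({EXPERIMENTATION})),
    AgentRole("Professor", ("report writing",),
              frozenset({REPORT_WRITING})),
)


def agents_for(phase: str) -> list[str]:
    """Return the names of the roles that take part in a given phase."""
    return [role.name for role in ROLES if phase in role.phases]


if __name__ == "__main__":
    for phase in (LITERATURE_REVIEW, EXPERIMENTATION, REPORT_WRITING):
        print(phase, "->", agents_for(phase))
```

Representing roles as data rather than hard-coding them into each stage makes it easy to see, and change, who is "in the room" at each step.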

The research process with Agent Laboratory consists of three key stages. The first is the literature review, during which agents gather and analyze relevant publications via the arXiv API; this phase helps contextualize the work and identify suitable methodologies. The second is the experimental phase, which covers planning, data preparation, and running experiments. Here a specialized component called mle-solver (machine learning problem solver) comes into play: it autonomously generates, tests, and refines machine learning code, using an iterative method that continuously evaluates results and adjusts the code to improve performance. The third phase is writing the scientific report, handled by paper-solver (scientific article solver), a module that synthesizes the results into a standard academic format. The system generates structured documents that follow scientific publication conventions, complete with abstract, introduction, methodology, results, and discussion.
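The iterative loop attributed to mle-solver can be illustrated with a generic generate-test-refine procedure: propose a modification of the current best candidate, score it, and keep it only if the score improves. This is a minimal greedy sketch under stated assumptions. The function names, the toy "learning rate" candidate, and the scoring function are hypothetical, and the real component works on generated code with LLM-driven edits rather than random perturbations.

```python
import math
import random
from typing import Callable, TypeVar

Candidate = TypeVar("Candidate")


def iterative_refine(
    initial: Candidate,
    propose: Callable[[Candidate, random.Random], Candidate],
    evaluate: Callable[[Candidate], float],
    steps: int = 50,
    seed: int = 0,
) -> tuple[Candidate, float, list[float]]:
    """Greedy generate-test-refine loop: keep a proposal only if it scores higher.

    Returns the best candidate, its score, and the best score after each step.
    """
    rng = random.Random(seed)
    best, best_score = initial, evaluate(initial)
    history = [best_score]
    for _ in range(steps):
        candidate = propose(best, rng)  # "generate": modify the current best
        score = evaluate(candidate)     # "test": run it and measure the result
        if score > best_score:          # "refine": accept only improvements
            best, best_score = candidate, score
        history.append(best_score)
    return best, best_score, history


def score_lr(lr: float) -> float:
    """Hypothetical validation score that peaks at a learning rate of 1e-3."""
    return -((math.log10(lr) + 3) ** 2)


def nudge_lr(lr: float, rng: random.Random) -> float:
    """Hypothetical proposal: scale the learning rate up or down by up to ~3x."""
    return lr * 10 ** rng.uniform(-0.5, 0.5)


if __name__ == "__main__":
    best_lr, best_score, history = iterative_refine(0.1, nudge_lr, score_lr)
    print(f"best lr ~ {best_lr:.2e}, score {best_score:.3f}")
```

Because only improvements are accepted, the best score never decreases; the trade-off is that a purely greedy loop can stall in a local optimum.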

Figure 2. How Agent Laboratory Works (AgentLaboratory.github.io)

The system can operate either fully autonomously, where agents sequentially proceed through all stages without human intervention, or in co-pilot mode (collaborative assistance), where researchers can provide feedback and guidance at each checkpoint of the process.
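The difference between the two modes can be sketched as a pipeline with an optional feedback hook at each checkpoint: without the hook the stages run back to back, and with it a human can attach guidance that later stages can see. All names here (`run_pipeline`, `make_stage`, the stage labels) are hypothetical and only illustrate the control flow, not Agent Laboratory's interface.

```python
from typing import Callable, Optional

# Hypothetical types: a stage transforms the shared research state; a feedback
# hook receives the stage name and the state and may return a note (or None).
State = dict
Stage = Callable[[State], State]
FeedbackHook = Callable[[str, State], Optional[str]]


def run_pipeline(stages: list[tuple[str, Stage]], state: State,
                 feedback: Optional[FeedbackHook] = None) -> State:
    """Run stages in order; with a feedback hook, pause after each (co-pilot)."""
    for name, stage in stages:
        state = stage(state)
        if feedback is None:
            continue  # autonomous mode: no checkpoint
        note = feedback(name, state)
        if note:
            # Store the human guidance so that later stages can take it into account.
            state = {**state, "notes": state.get("notes", []) + [(name, note)]}
    return state


def make_stage(name: str) -> Stage:
    """Toy stage that only records that it ran and how many notes it could see."""
    def stage(state: State) -> State:
        seen = len(state.get("notes", []))
        return {**state, "log": state.get("log", []) + [(name, seen)]}
    return stage


STAGES = [(n, make_stage(n))
          for n in ("literature_review", "experimentation", "report_writing")]

if __name__ == "__main__":
    auto = run_pipeline(STAGES, {})
    copilot = run_pipeline(STAGES, {}, feedback=lambda name, s: f"check {name}")
    print(auto["log"])
    print(copilot["log"], copilot["notes"])
```

In the co-pilot run, each stage sees the notes left at earlier checkpoints, which is the mechanism through which human guidance can shape later work.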

Evaluations conducted by Schmidgall's research team have produced promising results. In the experiments, 10 volunteer PhD students assessed scientific articles produced autonomously by Agent Laboratory. The tests systematically compared different language models as the system's processing engine, generating a total of 15 articles on 5 research topics. Articles generated with OpenAI's "o1-preview" model were perceived as the most useful and as having the highest-quality documentation, while "o1-mini", also from OpenAI, scored highest for experimental quality. These differences show that the choice of language model strongly influences the quality of the research produced. The co-pilot mode, which integrates human feedback, markedly improved the overall quality of the articles, with scores rising across several evaluation metrics, particularly technical quality, clarity, and methodological rigor. This suggests that human intervention at strategic points in the process can substantially enhance the final results.

AgentRxiv: Towards Autonomous Collaborative Research

Despite the progress made with Agent Laboratory, autonomous research systems share a fundamental limitation: they operate in isolation. Schmidgall and his team therefore gave Agent Laboratory a companion, AgentRxiv, a platform that functions as a preprint server dedicated to research produced by AI-based agents.

AgentRxiv, inspired by established digital archives such as arXiv, bioRxiv, and medRxiv, facilitates knowledge sharing between autonomous laboratories. Unlike traditional archives, which host research by human authors, AgentRxiv is designed "by agents for agents," allowing AI systems to build cumulatively on each other's discoveries.

The platform implements a similarity-based search mechanism that allows agents to retrieve the most relevant past research based on their queries. This targeted access to a growing database of agent-generated works promotes interdisciplinary knowledge transfer and accelerates scientific progress through iterative advancements.
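The text does not specify how AgentRxiv measures similarity. As an illustration under that caveat, the sketch below ranks archived papers by the cosine similarity between bag-of-words vectors of the query and of each abstract; a real system would more plausibly use learned text embeddings. The function names, archive entries, and IDs are made up for the example.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Lower-cased bag-of-words term counts (a stand-in for real embeddings)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def search(query: str, archive: dict[str, str], k: int = 3) -> list[tuple[str, float]]:
    """Return the k archived papers most similar to the query, best first."""
    q = vectorize(query)
    scored = [(paper_id, cosine(q, vectorize(abstract)))
              for paper_id, abstract in archive.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Hypothetical archive of agent-written abstracts.
ARCHIVE = {
    "agent-001": "Chain-of-thought prompting improves accuracy on math word problems",
    "agent-002": "Data augmentation for image classification with small datasets",
    "agent-003": "Self-consistency voting over multiple reasoning paths for math problems",
}

if __name__ == "__main__":
    for paper_id, sim in search("improving reasoning accuracy on math problems", ARCHIVE, k=2):
        print(f"{paper_id}: {sim:.3f}")
```

An agent working on mathematical reasoning would retrieve the two math-related papers and ignore the unrelated image-classification work, which is the kind of targeted access the paragraph above describes.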

Figure 3. The workflow of Agent Laboratory integrated into AgentRxiv (AgentRxiv.github.io)

Experiments conducted with AgentRxiv have empirically demonstrated the advantages of collaboration between AI agents. One particularly significant study focused on progressively improving reasoning techniques on the MATH-500 benchmark, a collection of 500 challenging mathematical problems that serves as a standard test of AI reasoning capabilities. In these experiments, Agent Laboratory systems were tasked with raising accuracy on these problems, developing and refining increasingly effective reasoning strategies and sharing their approaches on AgentRxiv.

Starting from a baseline accuracy of 70% (obtained with the "GPT-4o mini" model without advanced reasoning techniques), the autonomous laboratories connected to AgentRxiv gradually developed and refined reasoning algorithms that improved on this result. When the same experiments were run without access to AgentRxiv, performance plateaued at around 73-74%, demonstrating the importance of cumulative knowledge for further progress.

Another notable aspect is how well the discovered algorithms generalize. The simultaneous divergence averaging (SDA) method, initially developed for MATH-500, produced consistent improvements on other benchmarks such as GPQA (graduate-level science questions), MMLU-Pro (questions requiring deeper, more specialized understanding across many domains), and MedQA (complex medical questions). The largest gains were observed on MedQA and for models with lower baseline scores, highlighting the robust adaptability of SDA. The strength of this technique lies in its ability to approach a problem from multiple angles at once, reducing the likelihood of the systematic errors that can arise when relying on a single reasoning method.
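The intuition that combining several lines of reasoning reduces systematic errors can be shown with a toy example. This is not SDA itself: it is plain majority voting (in the spirit of self-consistency) over three hypothetical strategies, each of which fails on a different subset of problems. The strategies, the squaring task, and all function names are invented for illustration.

```python
from collections import Counter
from typing import Callable, Sequence

Solver = Callable[[int], int]


def make_strategy(blind_spot: int) -> Solver:
    """Toy 'reasoning strategy' for squaring n that errs systematically
    on every problem where n % 3 == blind_spot."""
    def solve(n: int) -> int:
        return n * n + 1 if n % 3 == blind_spot else n * n
    return solve


def majority_answer(problem: int, solvers: Sequence[Solver]) -> int:
    """Ask every strategy and return the most common answer."""
    answers = [solve(problem) for solve in solvers]
    return Counter(answers).most_common(1)[0][0]


def accuracy(predict: Callable[[int], int], problems: Sequence[int]) -> float:
    """Fraction of problems answered correctly (ground truth is n * n)."""
    return sum(predict(n) == n * n for n in problems) / len(problems)


STRATEGIES = [make_strategy(spot) for spot in range(3)]
PROBLEMS = list(range(9))

if __name__ == "__main__":
    for i, strategy in enumerate(STRATEGIES):
        print(f"strategy {i}: {accuracy(strategy, PROBLEMS):.2f}")
    combined = accuracy(lambda n: majority_answer(n, STRATEGIES), PROBLEMS)
    print(f"majority vote: {combined:.2f}")
```

Each strategy alone is right on two thirds of the problems, while the vote is right on all of them, because on every problem only one strategy errs. If the strategies shared the same blind spot, voting would not help: the benefit depends on the errors being reasonably independent.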

Also interesting are the results obtained with parallel research, a setup in which multiple artificial research laboratories work on similar problems at the same time and share their findings. In this experiment, three identically configured Agent Laboratory systems ran in parallel, each accessing AgentRxiv asynchronously. Together they achieved a maximum accuracy of 79.8% on MATH-500, surpassing the sequential setup, in which only one laboratory worked at a time. Intermediate milestones were also reached sooner: 76.2% accuracy took only seven articles in the parallel setup, compared with 23 in the sequential one. This shows how collaboration among multiple artificial research systems can significantly accelerate scientific progress.
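The figures reported above can be put on a common scale with simple arithmetic: absolute gain in percentage points, relative gain over the baseline, and how many times fewer articles the parallel setup needed to reach the 76.2% milestone. Because the 70% baseline is rounded in the text, the results are approximate, and using 74% for the isolated plateau (the upper end of the reported 73-74% range) is an assumption of this sketch.

```python
def absolute_gain(baseline: float, result: float) -> float:
    """Improvement in percentage points."""
    return result - baseline


def relative_gain(baseline: float, result: float) -> float:
    """Improvement as a fraction of the baseline."""
    return (result - baseline) / baseline


def speedup(sequential_papers: int, parallel_papers: int) -> float:
    """How many times fewer articles the parallel setup needed for a milestone."""
    return sequential_papers / parallel_papers


BASELINE = 70.0       # GPT-4o mini baseline on MATH-500 (rounded, as in the text)
PARALLEL_BEST = 79.8  # best accuracy reached by the three parallel labs
ISOLATED = 74.0       # assumed: upper end of the 73-74% plateau without AgentRxiv

if __name__ == "__main__":
    print(f"parallel: +{absolute_gain(BASELINE, PARALLEL_BEST):.1f} points, "
          f"{relative_gain(BASELINE, PARALLEL_BEST):.1%} relative")
    print(f"isolated: {relative_gain(BASELINE, ISOLATED):.1%} relative")
    print(f"76.2% milestone: {speedup(23, 7):.1f}x fewer articles in parallel")
```

With these inputs, the parallel labs gain about 9.8 points (roughly 14% relative), the isolated labs at most about 5.7% relative, and the 76.2% milestone is reached with roughly 3.3 times fewer articles.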

AI Scientist-v2 and AI Co-Scientist

Beyond Agent Laboratory, other autonomous research systems have recently made significant progress. AI Scientist-v2, developed by Sakana AI, is an autonomous scientific research system capable of generating original ideas and conducting experiments through an "agent tree": a tree-based exploration method in which each node represents a potential experiment, allowing agents to investigate multiple possibilities in parallel and dig deeper into the most promising hypotheses. The system produced the first fully AI-generated paper accepted, after peer review, at a workshop of the International Conference on Learning Representations (ICLR).
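A tree-based exploration of this kind can be illustrated with best-first search: keep a priority queue of candidate nodes and always expand the most promising one, within a fixed evaluation budget. The sketch below is a generic version with toy integer "ideas" and a made-up scoring function (`toy_expand`, `toy_score` are hypothetical); it is not Sakana AI's implementation, in which nodes are actual experiments generated and debugged by an LLM.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Node:
    """One candidate experiment in the tree."""
    idea: int
    score: float
    depth: int
    children: List["Node"] = field(default_factory=list)


def tree_search(root_idea: int,
                expand: Callable[[int], List[int]],
                score: Callable[[int], float],
                budget: int,
                max_depth: int) -> tuple[Node, int]:
    """Best-first search: always expand the highest-scoring unexpanded node.

    `budget` caps the total number of evaluated experiments.
    Returns the best node found and the number of evaluations used.
    """
    tie = itertools.count()  # tie-breaker so heapq never compares Node objects
    root = Node(root_idea, score(root_idea), 0)
    frontier = [(-root.score, next(tie), root)]  # min-heap on negated scores
    best, evaluated = root, 1
    while frontier and evaluated < budget:
        _, _, node = heapq.heappop(frontier)
        if node.depth >= max_depth:
            continue  # depth limit reached: do not dig deeper here
        for child_idea in expand(node.idea):
            if evaluated >= budget:
                break
            child = Node(child_idea, score(child_idea), node.depth + 1)
            node.children.append(child)
            evaluated += 1
            if child.score > best.score:
                best = child
            heapq.heappush(frontier, (-child.score, next(tie), child))
    return best, evaluated


def toy_expand(n: int) -> List[int]:
    """Hypothetical expansion: each 'experiment' spawns two variants."""
    return [2 * n, 2 * n + 1]


def toy_score(n: int) -> float:
    """Hypothetical experiment outcome; the best possible idea is 13."""
    return -float((n - 13) ** 2)


if __name__ == "__main__":
    best, used = tree_search(1, toy_expand, toy_score, budget=20, max_depth=3)
    print(f"best idea {best.idea} (score {best.score}) after {used} evaluations")
```

The budget is what makes the approach practical: promising branches are explored first, and weak ones are only revisited if evaluations remain.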

Meanwhile, Google has introduced AI Co-Scientist, a multi-agent system built on Gemini 2.0 that uses a "generate, debate, evolve" paradigm to formulate scientific hypotheses. Its effectiveness has been demonstrated in the biomedical field, where it identified promising drug candidates for acute myeloid leukemia and new targets for liver fibrosis, and recapitulated findings on antimicrobial resistance mechanisms in just two days.
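The "generate, debate, evolve" paradigm can be sketched as an evolutionary loop: start from a pool of hypotheses, rank them through pairwise comparisons, keep the winners, and add refined variants. Google describes its ranking step as an Elo-based tournament; for simplicity, this sketch counts pairwise wins instead. Hypotheses are stand-in integers, and `prefer_higher` and `tweak` are hypothetical placeholders for LLM-driven debate and refinement.

```python
import itertools
import random
from typing import Callable, Dict, List


def debate(pool: List[int], judge: Callable[[int, int], bool]) -> Dict[int, int]:
    """Round-robin pairwise 'debates'; returns how many debates each hypothesis won."""
    wins = {h: 0 for h in pool}
    for a, b in itertools.combinations(pool, 2):
        wins[a if judge(a, b) else b] += 1
    return wins


def generate_debate_evolve(seeds: List[int],
                           judge: Callable[[int, int], bool],
                           mutate: Callable[[int, random.Random], int],
                           rounds: int = 5, keep: int = 3, seed: int = 0) -> int:
    """Simplified generate-debate-evolve loop; returns the top-ranked hypothesis."""
    rng = random.Random(seed)
    pool = list(dict.fromkeys(seeds))  # generate: initial, de-duplicated hypotheses
    for _ in range(rounds):
        wins = debate(pool, judge)     # debate: rank by pairwise wins
        top = sorted(pool, key=wins.__getitem__, reverse=True)[:keep]
        # evolve: keep the winners and add a mutated variant of each
        pool = list(dict.fromkeys(top + [mutate(h, rng) for h in top]))
    wins = debate(pool, judge)         # final ranking of the surviving pool
    return max(pool, key=wins.__getitem__)


def prefer_higher(a: int, b: int) -> bool:
    """Hypothetical judge: a larger integer stands for a more promising hypothesis."""
    return a > b


def tweak(h: int, rng: random.Random) -> int:
    """Hypothetical mutation operator: perturb a hypothesis slightly."""
    return h + rng.choice([-1, 1, 2])


if __name__ == "__main__":
    print(generate_debate_evolve([3, 1, 4], prefer_higher, tweak))
```

Because the winners of each round survive into the next, the best hypothesis in the pool can only stay the same or improve; the mutation step is what lets the pool move beyond the initial ideas.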

Empirical Benefits of AI Agent Collaboration

Agent Laboratory, AgentRxiv, AI Scientist-v2, and AI Co-Scientist represent a paradigm shift in how we conceive the relationship between artificial intelligence and scientific research. Rather than replacing human researchers, these systems aim to serve as enhanced assistants, automating repetitive tasks and allowing scientists to focus on the creative and conceptual aspects of research.

This symbiosis between human and artificial intelligence could significantly accelerate the pace of scientific discovery. Researchers can delegate in-depth literature reviews, the implementation of experimental routines, and the documentation of results to AI agents, freeing up time to formulate new hypotheses, interpret data, and pursue interdisciplinary synthesis.

Furthermore, the demonstration that AI agents can collaborate through platforms like AgentRxiv suggests the possibility of a new form of collective science, where AI systems contribute to the evolution of knowledge in ways that complement human intelligence.

However, these systems also raise important issues. Ethical concerns about the potential generation of misleading scientific content, or the propagation of biases in results, require further reflection. Robust governance mechanisms must be developed to ensure that these systems produce content aligned with ethical principles and social values.